
Legacy modernization hurts because the risk is real: behavior is undocumented, tests are missing, and critical knowledge lives in a few people’s heads. That’s why many teams keep “living with it” — nobody wants to be the person who breaks production.

But legacy code modernization using AI is now practical when you use it for the right jobs: discovery, dependency mapping, test generation (with review), incremental refactors, and measurable quality gates. BCG explains how GenAI is rewriting legacy tech modernization rules, especially by accelerating discovery and increasing transparency — exactly what teams need before touching fragile legacy systems.

In other words, AI for legacy code modernization works when you treat AI like an accelerator inside a controlled engineering workflow — not like a rewrite button. This guide shows the approaches, a decision matrix, a safe 9-step workflow with gates, tool categories that matter, common failure modes, and KPIs.

AI-assisted legacy code modernization does not mean “press a button and get a new system.” It means AI helps you:

Engineers discussing legacy code modernization using AI often focus on a grounded goal: reduce uncertainty by extracting behavior and business rules before refactoring. A useful practitioner perspective is captured in the LLMDevs article.

Use this when the system must keep running and replacement isn’t feasible. AI can help generate characterization tests, identify duplication, and propose safe refactor steps — but only after you lock behavior.

Best for monoliths with many integrations. You carve off seams, put stable contracts around slices, and replace gradually. AI for legacy code modernization helps by mapping dependencies, suggesting seam candidates, and drafting interface documentation.
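To make the "seam plus stable contract" idea concrete, here is a minimal sketch of a routing facade in Python. Everything in it is hypothetical and for illustration only: the `StranglerFacade` class, the operation names, and both handler functions are assumptions, not part of any specific framework. Callers talk to the facade, and individual operations are moved to new code one at a time while everything else still reaches the legacy path.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


class StranglerFacade:
    """Routes each operation to the legacy or the modernized implementation."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, operation: str, handler: Handler) -> None:
        # Register a modernized handler for one slice of functionality.
        self._migrated[operation] = handler

    def handle(self, operation: str, payload: dict) -> dict:
        # Anything not explicitly migrated still goes to the legacy code.
        handler = self._migrated.get(operation, self._legacy)
        return handler(payload)


def legacy_monolith(payload: dict) -> dict:
    # Hypothetical stand-in for the existing system.
    return {"source": "legacy", "total": payload["qty"] * payload["price"]}


def new_pricing_service(payload: dict) -> dict:
    # Hypothetical stand-in for the extracted slice; same contract as before.
    return {"source": "new", "total": payload["qty"] * payload["price"]}


facade = StranglerFacade(legacy_monolith)
facade.migrate("price_quote", new_pricing_service)
```

With this shape, rolling a slice back is a one-line change (drop the `migrate` call), which is the main reason to keep the contract identical on both sides.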

Use this when specific components are blocked by platform constraints (EOL runtimes, scaling limits, operational pain). AI supports assessment, migration notes, and mechanical upgrade help — but architecture decisions remain human work.

Useful for framework upgrades or language transitions where changes are mechanical. This is where legacy code modernization using GenAI can look impressive and still be risky if you skip tests and controlled releases.

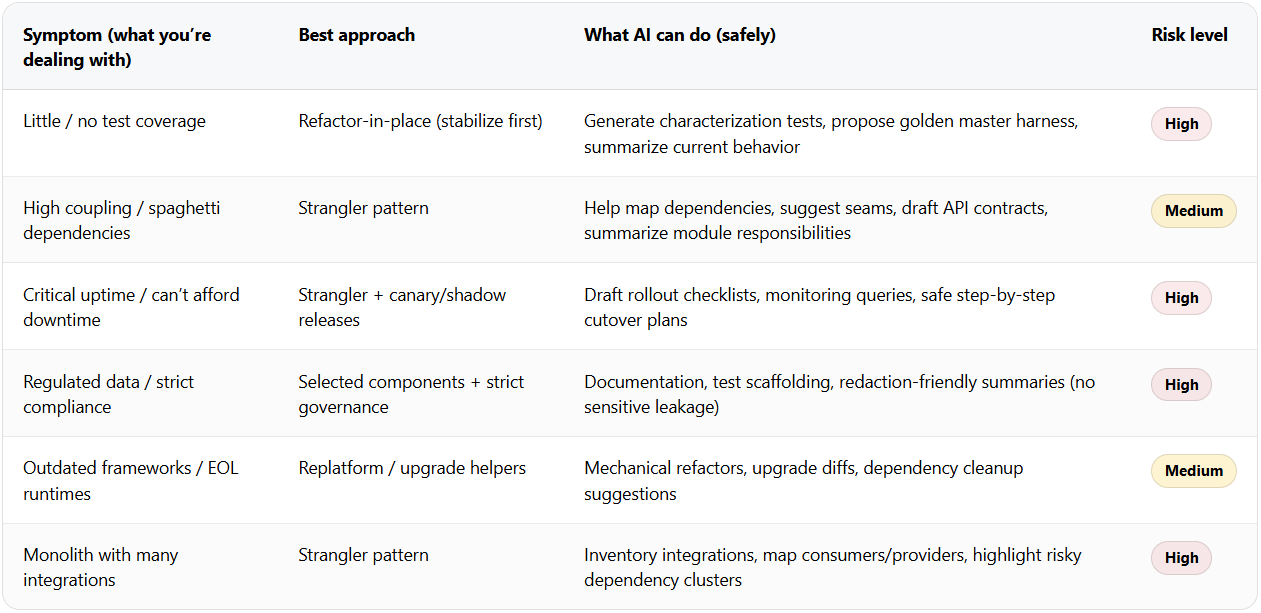

Use this matrix to avoid the #1 mistake: picking a modernization path that doesn’t match your system’s symptoms.

If you’re unsure, default to the approach that reduces uncertainty first (inventory + baseline), not the one that promises the biggest rewrite. That’s the safest way to scale legacy code modernization using AI.

Define the “do-not-break” list: key outputs, public interfaces, regulatory constraints, performance budgets, and the business flows that keep the lights on.

Quality gate: invariants are written down before any AI-generated code lands.

Use AI to summarize modules, identify integration points, and propose a dependency graph — then validate it with real signals (logs, traffic, runtime traces, deployment configs).

Quality gate: important dependencies are confirmed by evidence, not only AI guesses.
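One way to apply this gate is to treat the AI-proposed graph and the runtime evidence as two sets of edges and triage the difference. The sketch below is a simplified illustration: the service names are invented, and it assumes you have already extracted `(caller, callee)` pairs from traces or logs by some other means.

```python
from typing import Dict, Set, Tuple

Edge = Tuple[str, str]  # (caller, callee)


def triage_dependencies(proposed: Set[Edge], observed: Set[Edge]) -> Dict[str, Set[Edge]]:
    """Split dependency edges by how well evidence supports them."""
    return {
        # Proposed by AI and seen at runtime: safe to rely on.
        "confirmed": proposed & observed,
        # Proposed by AI but never observed: may be dead code or a wrong guess.
        "unverified": proposed - observed,
        # Observed at runtime but missing from the AI graph: hidden coupling.
        "missed": observed - proposed,
    }


# Hypothetical example data.
ai_proposed = {
    ("billing", "customer_db"),
    ("billing", "tax_service"),
    ("reports", "billing"),
}
runtime_observed = {
    ("billing", "customer_db"),
    ("billing", "ftp_export"),
    ("reports", "billing"),
}

result = triage_dependencies(ai_proposed, runtime_observed)
```

The "missed" bucket is usually the most valuable one: those are the integrations nobody documented and the model could not infer from the code alone.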

Create a baseline that captures current behavior on representative inputs (even if behavior is weird). This prevents “silent improvement” that breaks users.

Quality gate: baseline tests are stable, reproducible, and run in CI.
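A golden master (characterization) baseline can be as simple as recording current outputs for representative inputs and diffing later runs against that snapshot. The sketch below assumes a pure function; `legacy_shipping_fee`, the input cases, and the file name are hypothetical stand-ins. Real systems usually also need to pin clocks, randomness, and external calls before the baseline becomes stable.

```python
import json
import tempfile
from pathlib import Path


def legacy_shipping_fee(weight_kg: float, country: str) -> float:
    # Hypothetical legacy function whose behavior we want to lock,
    # including any quirks users may depend on.
    fee = 4.0 + 1.5 * weight_kg
    if country == "US":
        fee = fee * 0.9
    return round(fee, 2)


# Representative inputs, ideally sampled from real traffic.
CASES = [(0.5, "US"), (10.0, "US"), (2.0, "DE"), (0.0, "FR")]


def record_baseline(func, cases, path: Path) -> None:
    """Capture current behavior as-is and store it as the golden master."""
    snapshot = [{"args": list(args), "result": func(*args)} for args in cases]
    path.write_text(json.dumps(snapshot, indent=2, sort_keys=True))


def check_against_baseline(func, path: Path) -> list:
    """Return every case where behavior drifted from the recorded snapshot."""
    drift = []
    for entry in json.loads(path.read_text()):
        actual = func(*entry["args"])
        if actual != entry["result"]:
            drift.append(
                {"args": entry["args"], "expected": entry["result"], "actual": actual}
            )
    return drift


baseline_path = Path(tempfile.mkdtemp()) / "shipping_fee_baseline.json"
record_baseline(legacy_shipping_fee, CASES, baseline_path)
```

In CI, a refactored implementation would be passed to `check_against_baseline` and the build would fail whenever the returned list is non-empty.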

Let AI propose tests, edge cases, and fixtures — but treat tests like production code: review them, name them clearly, and ensure they assert the right invariants.

Quality gate: critical flows have coverage you trust (not just a coverage %), and tests fail on behavior drift.

Break work into PRs reviewers can fully understand. AI tools make it cheap to generate large diffs, so your process should force small ones.

Quality gate: one PR = one intent; reviewers can explain the change without a long meeting.

Wire CI so refactors can’t merge unless baseline + unit/integration checks pass. AI speed is useless if regression testing is still manual.

Quality gate: CI blocks merges on regressions (no “temporary bypass” culture).

Run SAST, dependency scanning/SBOM, and secrets scanning regardless of how “clean” the diff looks. Human review and AI review do not replace security tooling.

Quality gate: security checks are mandatory, repeatable, and produce actionable output.

Deploy changes to a small slice or a parallel “shadow” path, compare results, and only then ramp up.

Quality gate: explicit rollout metrics + rollback triggers exist (not “we’ll watch it”).
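The shadow path described above can be sketched as a wrapper that always serves the legacy result while running the candidate on the same input and recording disagreements. This is an illustrative outline, not a production pattern: `ShadowReport`, `shadow_call`, and the two discount functions are hypothetical, and it assumes the candidate has no side effects (a real shadow path must not write to shared state).

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class ShadowReport:
    total: int = 0
    mismatches: List[dict] = field(default_factory=list)

    @property
    def mismatch_rate(self) -> float:
        return len(self.mismatches) / self.total if self.total else 0.0


def shadow_call(
    legacy: Callable[[Any], Any],
    candidate: Callable[[Any], Any],
    request: Any,
    report: ShadowReport,
) -> Any:
    """Serve the legacy result; run the candidate only for comparison."""
    expected = legacy(request)
    report.total += 1
    try:
        actual = candidate(request)
    except Exception as exc:
        # A crash in the candidate must never affect the user-facing result.
        report.mismatches.append(
            {"request": request, "expected": expected, "error": repr(exc)}
        )
        return expected
    if actual != expected:
        report.mismatches.append(
            {"request": request, "expected": expected, "actual": actual}
        )
    return expected


def legacy_discount(order_total: int) -> int:
    # Hypothetical legacy rule: discount applies at 100 and above.
    return 10 if order_total >= 100 else 0


def candidate_discount(order_total: int) -> int:
    # Hypothetical refactor with a boundary bug (> instead of >=).
    return 10 if order_total > 100 else 0


report = ShadowReport()
for order_total in [50, 100, 150, 200]:
    shadow_call(legacy_discount, candidate_discount, order_total, report)
```

The mismatch rate from a report like this is a natural rollout metric: ramp up only while it stays at zero or below a threshold agreed in advance.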

Monitor error rates, latency, and business signals. If something drifts, roll back fast and learn.

Quality gate: rollback is rehearsed, quick, and owned (names, not teams).
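Rollback triggers are easier to enforce when they are written as data rather than as tribal knowledge. The following sketch shows one possible shape; the metric names, thresholds, and owner labels are placeholders chosen for illustration, and the rule that a missing metric counts as a fired trigger is a design assumption, not a universal standard.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class RollbackTrigger:
    metric: str
    max_allowed: float
    owner: str  # a named person, not a team


def fired_triggers(
    triggers: List[RollbackTrigger], observed: Dict[str, float]
) -> List[RollbackTrigger]:
    """Return triggers that demand a rollback for the observed metrics."""
    fired = []
    for trigger in triggers:
        value = observed.get(trigger.metric)
        # Missing data is treated as unsafe: no signal means no proof of health.
        if value is None or value > trigger.max_allowed:
            fired.append(trigger)
    return fired


# Hypothetical thresholds and owners.
TRIGGERS = [
    RollbackTrigger("error_rate", 0.01, "owner_a"),
    RollbackTrigger("p95_latency_ms", 400.0, "owner_b"),
    RollbackTrigger("checkout_failure_rate", 0.02, "owner_c"),
]

observed_metrics = {"error_rate": 0.004, "p95_latency_ms": 620.0}
fired = fired_triggers(TRIGGERS, observed_metrics)
```

Keeping an `owner` on each trigger mirrors the gate above: when a trigger fires, there is no debate about who makes the rollback call.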

AI makes modernization faster; the gates are what make it safe. This is where AI for legacy code modernization delivers value without turning production into your test environment.

When teams ask for AI tools for legacy code modernization, category-level thinking is more useful than brand lists:

Even government modernization efforts are leaning on AI to handle legacy complexity — but with strict verification and governance. GitLab’s breakdown of how AI can fix government’s legacy code problem offers a useful real-world framing.

If you’re planning legacy code modernization using AI, the fastest safe start is a short “risk-first setup”: map dependencies, lock behavior, add CI/security gates, and modernize in slices.

If you want help setting up this workflow without slowing delivery, explore CodeGeeks Solutions and check independent feedback on Clutch reviews.

Legacy code modernization using AI isn’t about replacing engineering judgment. It’s about reducing uncertainty: making dependencies visible, locking behavior, accelerating test scaffolding, and executing refactors incrementally with strong quality gates. Do that, and you modernize faster — without gambling with production.

When you can’t validate behavior (no tests and no ability to build a baseline), when changes touch high-risk domains (auth/crypto/payments), or when compliance rules prohibit sharing code context without a governed setup.

Start with golden master/characterization tests around critical flows, not broad coverage targets. Baseline behavior first, then modernize in small slices.

Characterization tests + CI regression gates + production validation (shadow/canary) and monitoring of key business/technical metrics.

Repo-level summarization/search and dependency mapping typically deliver the fastest early wins, followed by AI-assisted test scaffolding — as long as humans review outputs.

Use strict governance: avoid secrets/PII in prompts, prefer controlled environments where required, and run independent security checks (SAST, dependency scans, secrets scanning) on every change.
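As a rough illustration of keeping secrets and PII out of prompts, the sketch below masks a few obvious patterns before code context leaves your environment. The regular expressions and the `redact` helper are assumptions made for this example and are far from exhaustive; treat this as a last-line filter that complements dedicated secrets scanning rather than replacing it.

```python
import re

# Illustrative patterns only; real projects need a broader, maintained rule set.
ASSIGNMENT = re.compile(r"(?i)(password|passwd|secret|api_key|token)(\s*[:=]\s*)(\S+)")
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(text: str) -> str:
    """Mask likely credentials and email addresses before prompting."""
    # Keep the key name and separator so the code stays readable for the model.
    text = ASSIGNMENT.sub(lambda m: f"{m.group(1)}{m.group(2)}<REDACTED>", text)
    text = EMAIL.sub("<EMAIL>", text)
    return text


snippet = 'db_password = "hunter2"\nnotify = "ops@example.com"\nretries = 3'
safe_snippet = redact(snippet)
```

Running the redaction step inside the same pipeline that builds prompts makes it harder to bypass than a guideline that relies on each engineer remembering it.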